
Administer a Cluster

- 1: Administration with kubeadm

- 1.1: Certificate Management with kubeadm

- 1.2: Configuring a cgroup driver

- 1.3: Upgrading kubeadm clusters

- 1.4: Adding Windows nodes

- 1.5: Upgrading Windows nodes

- 2: Migrating from dockershim

- 2.1: Check whether Dockershim deprecation affects you

- 2.2: Migrating telemetry and security agents from dockershim

- 3: Certificates

- 4: Manage Memory, CPU, and API Resources

- 4.1: Configure Default Memory Requests and Limits for a Namespace

- 4.2: Configure Default CPU Requests and Limits for a Namespace

- 4.3: Configure Minimum and Maximum Memory Constraints for a Namespace

- 4.4: Configure Minimum and Maximum CPU Constraints for a Namespace

- 4.5: Configure Memory and CPU Quotas for a Namespace

- 4.6: Configure a Pod Quota for a Namespace

- 5: Install a Network Policy Provider

- 5.1: Use Antrea for NetworkPolicy

- 5.2: Use Calico for NetworkPolicy

- 5.3: Use Cilium for NetworkPolicy

- 5.4: Use Kube-router for NetworkPolicy

- 5.5: Romana for NetworkPolicy

- 5.6: Weave Net for NetworkPolicy

- 6: Access Clusters Using the Kubernetes API

- 7: Access Services Running on Clusters

- 8: Advertise Extended Resources for a Node

- 9: Autoscale the DNS Service in a Cluster

- 10: Change the default StorageClass

- 11: Change the Reclaim Policy of a PersistentVolume

- 12: Cloud Controller Manager Administration

- 13: Configure Quotas for API Objects

- 14: Control CPU Management Policies on the Node

- 15: Control Topology Management Policies on a node

- 16: Customizing DNS Service

- 17: Debugging DNS Resolution

- 18: Declare Network Policy

- 19: Developing Cloud Controller Manager

- 20: Enable Or Disable A Kubernetes API

- 21: Enabling Service Topology

- 22: Enabling Topology Aware Hints

- 23: Encrypting Secret Data at Rest

- 24: Guaranteed Scheduling For Critical Add-On Pods

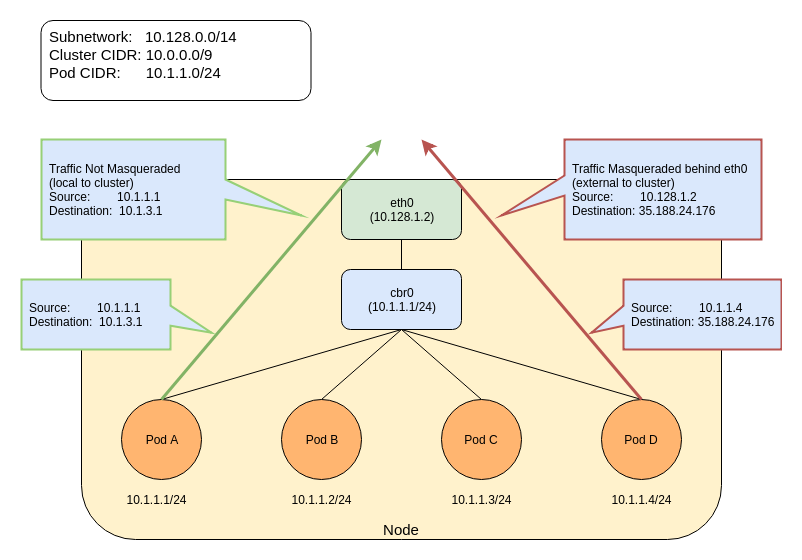

- 25: IP Masquerade Agent User Guide

- 26: Limit Storage Consumption

- 27: Migrate Replicated Control Plane To Use Cloud Controller Manager

- 28: Namespaces Walkthrough

- 29: Operating etcd clusters for Kubernetes

- 30: Reconfigure a Node's Kubelet in a Live Cluster

- 31: Reserve Compute Resources for System Daemons

- 32: Running Kubernetes Node Components as a Non-root User

- 33: Safely Drain a Node

- 34: Securing a Cluster

- 35: Set Kubelet parameters via a config file

- 36: Set up a High-Availability Control Plane

- 37: Share a Cluster with Namespaces

- 38: Upgrade A Cluster

- 39: Use Cascading Deletion in a Cluster

- 40: Using a KMS provider for data encryption

- 41: Using CoreDNS for Service Discovery

- 42: Using NodeLocal DNSCache in Kubernetes clusters

- 43: Using sysctls in a Kubernetes Cluster

- 44: Utilizing the NUMA-aware Memory Manager

1 - Administration with kubeadm

1.1 - Certificate Management with kubeadm

Kubernetes v1.15 [stable]

Client certificates generated by kubeadm expire after 1 year. This page explains how to manage certificate renewals with kubeadm.

Before you begin

You should be familiar with PKI certificates and requirements in Kubernetes.

Using custom certificates

By default, kubeadm generates all the certificates needed for a cluster to run. You can override this behavior by providing your own certificates.

To do so, you must place them in whatever directory is specified by the --cert-dir flag or the certificatesDir field of kubeadm's ClusterConfiguration. By default this is /etc/kubernetes/pki.

If a given certificate and private key pair exists before running kubeadm init, kubeadm does not overwrite them. This means you can, for example, copy an existing CA into /etc/kubernetes/pki/ca.crt and /etc/kubernetes/pki/ca.key, and kubeadm will use this CA for signing the rest of the certificates.
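Before copying an existing CA into place, you might want to confirm that the certificate and the key actually belong together, because kubeadm will sign everything else with that pair. The sketch below assumes openssl is installed; ca_pair_matches is a hypothetical helper name, not a kubeadm command. It compares the public key embedded in the certificate with the public key derived from the private key.

```shell
# Hypothetical helper (not part of kubeadm): succeed only if the certificate
# in $1 and the private key in $2 share the same public key.
ca_pair_matches() {
  local crt="$1" key="$2"
  local crt_pub key_pub
  # Public key embedded in the certificate, printed as a PEM "PUBLIC KEY" block.
  crt_pub="$(openssl x509 -in "$crt" -noout -pubkey)" || return 1
  # Public key derived from the private key, in the same PEM form.
  key_pub="$(openssl pkey -in "$key" -pubout)" || return 1
  [ "$crt_pub" = "$key_pub" ]
}
```

For example: ca_pair_matches /etc/kubernetes/pki/ca.crt /etc/kubernetes/pki/ca.key && echo "CA pair matches".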

External CA mode

It is also possible to provide only the ca.crt file and not the ca.key file (this is only available for the root CA file, not other cert pairs). If all other certificates and kubeconfig files are in place, kubeadm recognizes this condition and activates the "External CA" mode. kubeadm will proceed without the CA key on disk.

Instead, run the controller-manager standalone with --controllers=csrsigner and point to the CA certificate and key.

PKI certificates and requirements includes guidance on setting up a cluster to use an external CA.

Check certificate expiration

You can use the check-expiration subcommand to check when certificates expire:

kubeadm certs check-expiration

The output is similar to this:

CERTIFICATE                EXPIRES                  RESIDUAL TIME   CERTIFICATE AUTHORITY   EXTERNALLY MANAGED
admin.conf                 Dec 30, 2020 23:36 UTC   364d                                    no
apiserver                  Dec 30, 2020 23:36 UTC   364d            ca                      no
apiserver-etcd-client      Dec 30, 2020 23:36 UTC   364d            etcd-ca                 no
apiserver-kubelet-client   Dec 30, 2020 23:36 UTC   364d            ca                      no
controller-manager.conf    Dec 30, 2020 23:36 UTC   364d                                    no
etcd-healthcheck-client    Dec 30, 2020 23:36 UTC   364d            etcd-ca                 no
etcd-peer                  Dec 30, 2020 23:36 UTC   364d            etcd-ca                 no
etcd-server                Dec 30, 2020 23:36 UTC   364d            etcd-ca                 no
front-proxy-client         Dec 30, 2020 23:36 UTC   364d            front-proxy-ca          no
scheduler.conf             Dec 30, 2020 23:36 UTC   364d                                    no

CERTIFICATE AUTHORITY   EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
ca                      Dec 28, 2029 23:36 UTC   9y              no
etcd-ca                 Dec 28, 2029 23:36 UTC   9y              no
front-proxy-ca          Dec 28, 2029 23:36 UTC   9y              no

The command shows expiration/residual time for the client certificates in the /etc/kubernetes/pki folder and for the client certificate embedded in the KUBECONFIG files used by kubeadm (admin.conf, controller-manager.conf and scheduler.conf).

Additionally, kubeadm informs the user if the certificate is externally managed; in this case, the user should take care of managing certificate renewal manually or by using other tools.

Warning: kubeadm cannot manage certificates signed by an external CA.

Note: kubelet.conf is not included in the list above because kubeadm configures kubelet for automatic certificate renewal with rotatable certificates under /var/lib/kubelet/pki. To repair an expired kubelet client certificate, see Kubelet client certificate rotation fails.

Warning: On nodes created with kubeadm init prior to kubeadm version 1.17, there is a bug where you have to manually modify the contents of kubelet.conf. After kubeadm init finishes, you should update kubelet.conf to point to the rotated kubelet client certificates, by replacing client-certificate-data and client-key-data with:

client-certificate: /var/lib/kubelet/pki/kubelet-client-current.pem
client-key: /var/lib/kubelet/pki/kubelet-client-current.pem
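If you want to act before certificates expire, you could filter the check-expiration output in a script. The following is a minimal sketch, assuming the column layout shown in the sample output above (residual time in the 7th whitespace-separated field); certs_expiring_within is a hypothetical helper, and the residual-time formats it parses ("364d", "9y", "23h", and "<invalid>" for an expired certificate) are an assumption rather than a documented kubeadm contract.

```shell
# Hypothetical helper: read `kubeadm certs check-expiration` output on stdin
# and print the names of certificates whose residual time is below $1 days.
# Assumes the residual time is the 7th field of every certificate row.
certs_expiring_within() {
  awk -v limit="$1" '
    # Convert a residual time such as "1y30d", "364d" or "23h" into whole days.
    function to_days(s,    days, num, i, c) {
      days = 0; num = ""
      for (i = 1; i <= length(s); i++) {
        c = substr(s, i, 1)
        if (c ~ /[0-9]/) { num = num c; continue }
        if (c == "y") days += num * 365
        else if (c == "d") days += num
        # Hours, minutes and seconds add up to less than a day and are ignored.
        num = ""
      }
      return days
    }
    # Header rows never match this pattern, so only certificate rows are checked.
    $7 ~ /^([0-9]+[ydhms])+$/ && to_days($7) < limit + 0 { print $1 }
    # An already expired certificate is assumed to be reported as <invalid>.
    $7 == "<invalid>" { print $1 }
  '
}
```

For example, kubeadm certs check-expiration | certs_expiring_within 30 would list every certificate, including the CAs, with less than 30 days left.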

Automatic certificate renewal

kubeadm renews all the certificates during control plane upgrade.

This feature is designed for addressing the simplest use cases; if you don't have specific requirements on certificate renewal and perform Kubernetes version upgrades regularly (less than 1 year in between each upgrade), kubeadm will take care of keeping your cluster up to date and reasonably secure.

Note: It is a best practice to upgrade your cluster frequently in order to stay secure.

If you have more complex requirements for certificate renewal, you can opt out of the default behavior by passing --certificate-renewal=false to kubeadm upgrade apply or to kubeadm upgrade node.

Warning: Prior to kubeadm version 1.17 there is a bug where the default value for --certificate-renewal is false for the kubeadm upgrade node command. In that case, you should explicitly set --certificate-renewal=true.

Manual certificate renewal

You can renew your certificates manually at any time with the kubeadm certs renew command.

This command performs the renewal using CA (or front-proxy-CA) certificate and key stored in /etc/kubernetes/pki.

After running the command you should restart the control plane Pods. This is required since dynamic certificate reload is currently not supported for all components and certificates.

Static Pods are managed by the local kubelet and not by the API Server, thus kubectl cannot be used to delete and restart them. To restart a static Pod you can temporarily remove its manifest file from /etc/kubernetes/manifests/ and wait for 20 seconds (see the fileCheckFrequency value in the KubeletConfiguration struct). The kubelet will terminate the Pod if it's no longer in the manifest directory. You can then move the file back and after another fileCheckFrequency period, the kubelet will recreate the Pod and the certificate renewal for the component can complete.
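The remove, wait, and restore sequence can be scripted. This is a minimal sketch, assuming it runs as root on the control plane node; restart_static_pod and its arguments are hypothetical, and the wait period is passed in rather than read from the kubelet configuration.

```shell
# Hypothetical helper: restart a static Pod by parking its manifest outside the
# kubelet's manifest directory for a while and then moving it back.
# $1 = manifest directory, $2 = manifest file name, $3 = seconds to wait
# (should be at least the kubelet's fileCheckFrequency, 20 seconds by default).
restart_static_pod() {
  local manifest_dir="$1" manifest="$2" wait_seconds="${3:-20}"
  local src parking
  src="${manifest_dir%/}/${manifest}"
  [ -f "$src" ] || { echo "no such manifest: $src" >&2; return 1; }
  # Move the manifest out of the watched directory so the kubelet stops the Pod.
  parking="$(mktemp -d)" || return 1
  mv "$src" "$parking/" || { rmdir "$parking"; return 1; }
  sleep "$wait_seconds"
  # Put it back; the kubelet recreates the Pod on a later check.
  mv "$parking/$manifest" "$src" || return 1
  rmdir "$parking"
}
```

For example, restart_static_pod /etc/kubernetes/manifests kube-apiserver.yaml 20. The function returns right after the manifest is moved back, so allow another fileCheckFrequency period before expecting the Pod to be running again.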

Warning: If you are running an HA cluster, this command needs to be executed on all the control-plane nodes.

Note: certs renew uses the existing certificates as the authoritative source for attributes (Common Name, Organization, SAN, etc.) instead of the kubeadm-config ConfigMap. It is strongly recommended to keep them both in sync.

The Kubernetes certificates normally reach their expiration date after one year. kubeadm certs renew provides the following options:

- --csr-only can be used to renew certificates with an external CA by generating certificate signing requests (without actually renewing certificates in place); see Renew certificates with external CA for more information.

- It's also possible to renew a single certificate instead of all.

Renew certificates with the Kubernetes certificates API

This section provides more details about how to execute manual certificate renewal using the Kubernetes certificates API.

Caution: These are advanced topics for users who need to integrate their organization's certificate infrastructure into a kubeadm-built cluster. If the default kubeadm configuration satisfies your needs, you should let kubeadm manage certificates instead.

Set up a signer

The Kubernetes Certificate Authority does not work out of the box. You can configure an external signer such as cert-manager, or you can use the built-in signer.

The built-in signer is part of kube-controller-manager.

To activate the built-in signer, you must pass the --cluster-signing-cert-file and --cluster-signing-key-file flags.

If you're creating a new cluster, you can use a kubeadm configuration file:

apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
controllerManager:
  extraArgs:
    cluster-signing-cert-file: /etc/kubernetes/pki/ca.crt
    cluster-signing-key-file: /etc/kubernetes/pki/ca.key

Create certificate signing requests (CSR)

See Create CertificateSigningRequest for creating CSRs with the Kubernetes API.

Renew certificates with external CA

This section provides more details about how to execute manual certificate renewal using an external CA.

To better integrate with external CAs, kubeadm can also produce certificate signing requests (CSRs). A CSR represents a request to a CA for a signed certificate for a client. In kubeadm terms, any certificate that would normally be signed by an on-disk CA can be produced as a CSR instead. A CA, however, cannot be produced as a CSR.

Create certificate signing requests (CSR)

You can create certificate signing requests with kubeadm certs renew --csr-only. Both the CSR and the accompanying private key are given in the output.

As with kubeadm init, you can pass in a directory with --csr-dir to output the CSRs to the specified location. If --csr-dir is not specified, the default certificate directory (/etc/kubernetes/pki) is used.

A CSR contains a certificate's name, domains, and IPs, but it does not specify usages. It is the responsibility of the CA to specify the correct cert usages when issuing a certificate.

- In openssl this is done with the openssl ca command.
- In cfssl you specify usages in the config file.

After a certificate is signed using your preferred method, the certificate and the private key must be copied to the PKI directory (by default /etc/kubernetes/pki).
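As an illustration of adding usages at signing time, here is a minimal sketch that substitutes openssl x509 -req for the openssl ca command mentioned above. It assumes openssl is installed and that the CSR is for a client certificate: openssl x509 -req does not copy the subject alternative names from the CSR by default, so serving certificates such as apiserver need a setup that copies them. The helper name, arguments, and the 365-day validity are hypothetical choices.

```shell
# Hypothetical helper: sign a CSR (for example one written by
# `kubeadm certs renew --csr-only`) with an external CA, adding the usages
# that the CSR itself does not carry. Client certificates only (no SAN copy).
# $1 = CSR, $2 = CA cert, $3 = CA key, $4 = output cert, $5 = extendedKeyUsage
sign_client_csr() {
  local csr="$1" ca_crt="$2" ca_key="$3" out="$4" eku="${5:-clientAuth}"
  local extfile rc
  extfile="$(mktemp)" || return 1
  # Extensions the CA is responsible for; the CSR supplies only names and IPs.
  cat > "$extfile" <<EOF
basicConstraints = critical, CA:FALSE
keyUsage = critical, digitalSignature, keyEncipherment
extendedKeyUsage = ${eku}
EOF
  openssl x509 -req -in "$csr" -CA "$ca_crt" -CAkey "$ca_key" \
    -CAcreateserial -days 365 -extfile "$extfile" -out "$out"
  rc=$?
  rm -f "$extfile"
  return "$rc"
}
```

For example, with hypothetical file names: sign_client_csr apiserver-kubelet-client.csr ca.crt ca.key apiserver-kubelet-client.crt clientAuth, then copy the certificate and its private key into the PKI directory as described above.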

Certificate authority (CA) rotation

Kubeadm does not support rotation or replacement of CA certificates out of the box.

For more information about manual rotation or replacement of CA, see manual rotation of CA certificates.

Enabling signed kubelet serving certificates

By default the kubelet serving certificate deployed by kubeadm is self-signed. This means a connection from external services like the metrics-server to a kubelet cannot be secured with TLS.

To configure the kubelets in a new kubeadm cluster to obtain properly signed serving certificates you must pass the following minimal configuration to kubeadm init:

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

serverTLSBootstrap: true

If you have already created the cluster you must adapt it by doing the following:

- Find and edit the kubelet-config-1.22 ConfigMap in the kube-system namespace. In that ConfigMap, the kubelet key has a KubeletConfiguration document as its value. Edit the KubeletConfiguration document to set serverTLSBootstrap: true.
- On each node, add the serverTLSBootstrap: true field in /var/lib/kubelet/config.yaml and restart the kubelet with systemctl restart kubelet.
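If you manage many nodes, the per-node edit could be scripted. This is a minimal sketch, assuming GNU sed and that /var/lib/kubelet/config.yaml keeps top-level fields on unindented lines; enable_server_tls_bootstrap is a hypothetical helper.

```shell
# Hypothetical helper: ensure a KubeletConfiguration file sets
# "serverTLSBootstrap: true" as a top-level field, editing it in place.
# Assumes GNU sed (-i without a suffix) and an unindented existing field.
enable_server_tls_bootstrap() {
  local config="$1"
  [ -f "$config" ] || { echo "missing file: $config" >&2; return 1; }
  if grep -q '^serverTLSBootstrap:' "$config"; then
    # Replace whatever value is there (for example false) with true.
    sed -i 's/^serverTLSBootstrap:.*/serverTLSBootstrap: true/' "$config"
  else
    # Make sure the file ends with a newline before appending the field.
    [ -z "$(tail -c 1 "$config")" ] || printf '\n' >> "$config"
    printf 'serverTLSBootstrap: true\n' >> "$config"
  fi
}
```

For example, run enable_server_tls_bootstrap /var/lib/kubelet/config.yaml as root on a node, then restart the kubelet with systemctl restart kubelet.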

The field serverTLSBootstrap: true will enable the bootstrap of kubelet serving certificates by requesting them from the certificates.k8s.io API. One known limitation is that the CSRs (Certificate Signing Requests) for these certificates cannot be automatically approved by the default signer in the kube-controller-manager (kubernetes.io/kubelet-serving). This will require action from the user or a third party controller.

These CSRs can be viewed using:

kubectl get csr

NAME        AGE     SIGNERNAME                      REQUESTOR                     CONDITION
csr-9wvgt   112s    kubernetes.io/kubelet-serving   system:node:worker-1          Pending
csr-lz97v   1m58s   kubernetes.io/kubelet-serving   system:node:control-plane-1   Pending

To approve them you can do the following:

kubectl certificate approve <CSR-name>
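To pick out only the pending kubelet serving CSRs from that listing, you could filter the table. This minimal sketch assumes the column layout shown above (NAME, AGE, SIGNERNAME, REQUESTOR, CONDITION); pending_serving_csrs is a hypothetical helper, and it deliberately only lists names rather than approving anything, because the requested IPs and domain names should be reviewed first.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of CSRs that use the kubelet-serving signer and are still Pending.
# Assumes SIGNERNAME is the 3rd column and CONDITION is the last one.
pending_serving_csrs() {
  awk 'NR > 1 && $3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}
```

For example, kubectl get csr | pending_serving_csrs prints candidate names. To inspect what a CSR asks for before approving it, you can decode its request, e.g. kubectl get csr <CSR-name> -o jsonpath='{.spec.request}' | base64 -d | openssl req -noout -text.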

By default, these serving certificates will expire after one year. Kubeadm sets the KubeletConfiguration field rotateCertificates to true, which means that close to expiration a new set of CSRs for the serving certificates will be created and must be approved to complete the rotation. To understand more see Certificate Rotation.

If you are looking for a solution for automatic approval of these CSRs it is recommended that you contact your cloud provider and ask if they have a CSR signer that verifies the node identity with an out of band mechanism.

Caution: This section links to third party projects that provide functionality required by Kubernetes. The Kubernetes project authors aren't responsible for these projects. This page follows CNCF website guidelines by listing projects alphabetically. To add a project to this list, read the content guide before submitting a change.

Third party custom controllers can be used:

Such a controller is not a secure mechanism unless it verifies not only the CommonName in the CSR but also the requested IPs and domain names. This prevents a malicious actor who has access to a kubelet client certificate from creating CSRs that request serving certificates for any IP or domain name.

1.2 - Configuring a cgroup driver

This page explains how to configure the kubelet cgroup driver to match the container runtime cgroup driver for kubeadm clusters.

Before you begin

You should be familiar with the Kubernetes container runtime requirements.

Configuring the container runtime cgroup driver

The Container runtimes page explains that the systemd driver is recommended for kubeadm based setups instead of the cgroupfs driver, because kubeadm manages the kubelet as a systemd service. The page also provides details on how to set up a number of different container runtimes with the systemd driver by default.

Configuring the kubelet cgroup driver

kubeadm allows you to pass a KubeletConfiguration structure during kubeadm init. This KubeletConfiguration can include the cgroupDriver field which controls the cgroup driver of the kubelet.

Note: In v1.22, if the user is not setting the cgroupDriver field under KubeletConfiguration, kubeadm will default it to systemd.

A minimal example of configuring the field explicitly:

# kubeadm-config.yaml

kind: ClusterConfiguration

apiVersion: kubeadm.k8s.io/v1beta3

kubernetesVersion: v1.21.0

---

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

cgroupDriver: systemd

Such a configuration file can then be passed to the kubeadm command:

kubeadm init --config kubeadm-config.yaml

Note: Kubeadm uses the same KubeletConfiguration for all nodes in the cluster. The KubeletConfiguration is stored in a ConfigMap object under the kube-system namespace.

Executing the sub commands init, join and upgrade would result in kubeadm writing the KubeletConfiguration as a file under /var/lib/kubelet/config.yaml and passing it to the local node kubelet.

Using the cgroupfs driver

As this guide explains, using the cgroupfs driver with kubeadm is not recommended.

To continue using cgroupfs and to prevent kubeadm upgrade from modifying the KubeletConfiguration cgroup driver on existing setups, you must be explicit about its value. This applies to a case where you do not wish future versions of kubeadm to apply the systemd driver by default.

See the below section on "Modify the kubelet ConfigMap" for details on how to be explicit about the value.

If you wish to configure a container runtime to use the cgroupfs driver, you must refer to the documentation of the container runtime of your choice.

Migrating to the systemd driver

To change the cgroup driver of an existing kubeadm cluster to systemd in-place, a similar procedure to a kubelet upgrade is required. This must include both steps outlined below.

Note: Alternatively, it is possible to replace the old nodes in the cluster with new ones that use the systemd driver. This requires executing only the first step below before joining the new nodes and ensuring the workloads can safely move to the new nodes before deleting the old nodes.

Modify the kubelet ConfigMap

- Find the kubelet ConfigMap name using kubectl get cm -n kube-system | grep kubelet-config.
- Call kubectl edit cm kubelet-config-x.yy -n kube-system (replace x.yy with the Kubernetes version).
- Either modify the existing cgroupDriver value or add a new field that looks like this:

  cgroupDriver: systemd

  This field must be present under the kubelet: section of the ConfigMap.

Update the cgroup driver on all nodes

For each node in the cluster:

- Drain the node using kubectl drain <node-name> --ignore-daemonsets
- Stop the kubelet using systemctl stop kubelet
- Stop the container runtime
- Modify the container runtime cgroup driver to systemd
- Set cgroupDriver: systemd in /var/lib/kubelet/config.yaml
- Start the container runtime
- Start the kubelet using systemctl start kubelet
- Uncordon the node using kubectl uncordon <node-name>

Execute these steps on nodes one at a time to ensure workloads have sufficient time to schedule on different nodes.

Once the process is complete ensure that all nodes and workloads are healthy.
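To confirm the change on a node, you can read the value back from /var/lib/kubelet/config.yaml. This is a minimal sketch, assuming the field is written as a simple "cgroupDriver: value" line; kubelet_cgroup_driver is a hypothetical helper.

```shell
# Hypothetical helper: print the cgroupDriver value from a KubeletConfiguration
# file, or "unset" if the field is absent. Surrounding double quotes are removed.
kubelet_cgroup_driver() {
  local value
  [ -r "$1" ] || { echo "cannot read $1" >&2; return 1; }
  value="$(awk '$1 == "cgroupDriver:" { gsub(/"/, "", $2); print $2; exit }' "$1")"
  printf '%s\n' "${value:-unset}"
}
```

For example, kubelet_cgroup_driver /var/lib/kubelet/config.yaml should print systemd on every node once the migration is finished.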

1.3 - Upgrading kubeadm clusters

This page explains how to upgrade a Kubernetes cluster created with kubeadm from version 1.21.x to version 1.22.x, and from version 1.22.x to 1.22.y (where y > x). Skipping MINOR versions when upgrading is unsupported.
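The rule about not skipping MINOR versions can be expressed as a small check. This is a minimal sketch, assuming plain vMAJOR.MINOR.PATCH strings without pre-release suffixes; is_supported_upgrade is a hypothetical helper and does not replace the checks that kubeadm upgrade plan performs.

```shell
# Hypothetical helper: succeed if upgrading from version $1 to version $2 either
# moves up exactly one MINOR version or moves up a PATCH within the same MINOR.
is_supported_upgrade() {
  local from="${1#v}" to="${2#v}"
  local f_major f_minor f_patch t_major t_minor t_patch
  f_major="$(echo "$from" | cut -d. -f1)"
  f_minor="$(echo "$from" | cut -d. -f2)"
  f_patch="$(echo "$from" | cut -d. -f3)"
  t_major="$(echo "$to" | cut -d. -f1)"
  t_minor="$(echo "$to" | cut -d. -f2)"
  t_patch="$(echo "$to" | cut -d. -f3)"
  # A MAJOR version change is outside the scope of this check.
  [ "$f_major" -eq "$t_major" ] || return 1
  # One MINOR step up is allowed regardless of PATCH (e.g. 1.21.5 -> 1.22.0).
  [ "$t_minor" -eq $((f_minor + 1)) ] && return 0
  # Within the same MINOR, only a higher PATCH counts as an upgrade.
  [ "$t_minor" -eq "$f_minor" ] && [ "$t_patch" -gt "$f_patch" ]
}
```

For example, is_supported_upgrade v1.21.5 v1.22.0 succeeds, while is_supported_upgrade v1.20.9 v1.22.0 fails because it skips 1.21.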

To see information about upgrading clusters created using older versions of kubeadm, please refer to the following pages instead:

- Upgrading a kubeadm cluster from 1.20 to 1.21

- Upgrading a kubeadm cluster from 1.19 to 1.20

- Upgrading a kubeadm cluster from 1.18 to 1.19

- Upgrading a kubeadm cluster from 1.17 to 1.18

The upgrade workflow at high level is the following:

- Upgrade a primary control plane node.

- Upgrade additional control plane nodes.

- Upgrade worker nodes.

Before you begin

- Make sure you read the release notes carefully.

- The cluster should use a static control plane and etcd pods or external etcd.

- Make sure to back up any important components, such as app-level state stored in a database. kubeadm upgrade does not touch your workloads, only components internal to Kubernetes, but backups are always a best practice.

- Swap must be disabled.

Additional information

- Draining nodes before kubelet MINOR version upgrades is required. In the case of control plane nodes, they could be running CoreDNS Pods or other critical workloads.

- All containers are restarted after upgrade, because the container spec hash value is changed.

Determine which version to upgrade to

Find the latest patch release for Kubernetes 1.22 using the OS package manager:

# apt-based distributions
apt update
apt-cache madison kubeadm
# find the latest 1.22 version in the list
# it should look like 1.22.x-00, where x is the latest patch

# yum-based distributions
yum list --showduplicates kubeadm --disableexcludes=kubernetes
# find the latest 1.22 version in the list
# it should look like 1.22.x-0, where x is the latest patch
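On apt-based systems, picking the newest 1.22 entry can be automated. This is a minimal sketch, assuming the apt-cache madison layout of "package | version | source" with the version in the second column, and that sort -V (GNU coreutils) is available; latest_patch_from_madison is a hypothetical helper. The yum listing has a different layout and is not handled here.

```shell
# Hypothetical helper: read `apt-cache madison kubeadm` output on stdin and
# print the newest version that starts with the prefix in $1 (e.g. "1.22.").
latest_patch_from_madison() {
  awk -F'|' -v prefix="$1" '
    { v = $2; gsub(/[ \t]/, "", v) }   # strip padding around the version column
    index(v, prefix) == 1 { print v }  # keep only versions in the wanted MINOR
  ' | sort -V | tail -n 1
}
```

For example, apt-cache madison kubeadm | latest_patch_from_madison 1.22. prints a string of the form 1.22.x-00 that you can use in the install commands.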

Upgrading control plane nodes

The upgrade procedure on control plane nodes should be executed one node at a time.

Pick a control plane node that you wish to upgrade first. It must have the /etc/kubernetes/admin.conf file.

Call "kubeadm upgrade"

For the first control plane node

- Upgrade kubeadm:

# apt-based distributions: replace x in 1.22.x-00 with the latest patch version
apt-mark unhold kubeadm && \
apt-get update && apt-get install -y kubeadm=1.22.x-00 && \
apt-mark hold kubeadm

# since apt-get version 1.1 you can also use the following method
apt-get update && \
apt-get install -y --allow-change-held-packages kubeadm=1.22.x-00

# yum-based distributions: replace x in 1.22.x-0 with the latest patch version
yum install -y kubeadm-1.22.x-0 --disableexcludes=kubernetes

- Verify that the download works and has the expected version:

kubeadm version

- Verify the upgrade plan:

kubeadm upgrade plan

This command checks that your cluster can be upgraded, and fetches the versions you can upgrade to. It also shows a table with the component config version states.

Note: kubeadm upgrade also automatically renews the certificates that it manages on this node. To opt out of certificate renewal, the flag --certificate-renewal=false can be used. For more information see the certificate management guide.

Note: If kubeadm upgrade plan shows any component configs that require manual upgrade, users must provide a config file with replacement configs to kubeadm upgrade apply via the --config command line flag. Failing to do so will cause kubeadm upgrade apply to exit with an error and not perform an upgrade.

- Choose a version to upgrade to, and run the appropriate command. For example:

# replace x with the patch version you picked for this upgrade
sudo kubeadm upgrade apply v1.22.x

Once the command finishes you should see:

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.22.x". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

- Manually upgrade your CNI provider plugin.

Your Container Network Interface (CNI) provider may have its own upgrade instructions to follow. Check the addons page to find your CNI provider and see whether additional upgrade steps are required.

This step is not required on additional control plane nodes if the CNI provider runs as a DaemonSet.

For the other control plane nodes

Same as the first control plane node but use:

sudo kubeadm upgrade node

instead of:

sudo kubeadm upgrade apply

Calling kubeadm upgrade plan and upgrading the CNI provider plugin are also no longer needed.

Drain the node

- Prepare the node for maintenance by marking it unschedulable and evicting the workloads:

# replace <node-to-drain> with the name of your node you are draining
kubectl drain <node-to-drain> --ignore-daemonsets

Upgrade kubelet and kubectl

- Upgrade the kubelet and kubectl:

# apt-based distributions: replace x in 1.22.x-00 with the latest patch version
apt-mark unhold kubelet kubectl && \
apt-get update && apt-get install -y kubelet=1.22.x-00 kubectl=1.22.x-00 && \
apt-mark hold kubelet kubectl

# since apt-get version 1.1 you can also use the following method
apt-get update && \
apt-get install -y --allow-change-held-packages kubelet=1.22.x-00 kubectl=1.22.x-00

# yum-based distributions: replace x in 1.22.x-0 with the latest patch version
yum install -y kubelet-1.22.x-0 kubectl-1.22.x-0 --disableexcludes=kubernetes

- Restart the kubelet:

sudo systemctl daemon-reload
sudo systemctl restart kubelet

Uncordon the node

- Bring the node back online by marking it schedulable:

# replace <node-to-drain> with the name of your node
kubectl uncordon <node-to-drain>

Upgrade worker nodes

The upgrade procedure on worker nodes should be executed one node at a time or few nodes at a time, without compromising the minimum required capacity for running your workloads.

Upgrade kubeadm

- Upgrade kubeadm:

# apt-based distributions: replace x in 1.22.x-00 with the latest patch version
apt-mark unhold kubeadm && \
apt-get update && apt-get install -y kubeadm=1.22.x-00 && \
apt-mark hold kubeadm

# since apt-get version 1.1 you can also use the following method
apt-get update && \
apt-get install -y --allow-change-held-packages kubeadm=1.22.x-00

# yum-based distributions: replace x in 1.22.x-0 with the latest patch version
yum install -y kubeadm-1.22.x-0 --disableexcludes=kubernetes

Call "kubeadm upgrade"

- For worker nodes this upgrades the local kubelet configuration:

sudo kubeadm upgrade node

Drain the node

- Prepare the node for maintenance by marking it unschedulable and evicting the workloads:

# replace <node-to-drain> with the name of your node you are draining
kubectl drain <node-to-drain> --ignore-daemonsets

Upgrade kubelet and kubectl

- Upgrade the kubelet and kubectl:

# apt-based distributions: replace x in 1.22.x-00 with the latest patch version
apt-mark unhold kubelet kubectl && \
apt-get update && apt-get install -y kubelet=1.22.x-00 kubectl=1.22.x-00 && \
apt-mark hold kubelet kubectl

# since apt-get version 1.1 you can also use the following method
apt-get update && \
apt-get install -y --allow-change-held-packages kubelet=1.22.x-00 kubectl=1.22.x-00

# yum-based distributions: replace x in 1.22.x-0 with the latest patch version
yum install -y kubelet-1.22.x-0 kubectl-1.22.x-0 --disableexcludes=kubernetes

- Restart the kubelet:

sudo systemctl daemon-reload
sudo systemctl restart kubelet

Uncordon the node

- Bring the node back online by marking it schedulable:

# replace <node-to-drain> with the name of your node
kubectl uncordon <node-to-drain>

Verify the status of the cluster

After the kubelet is upgraded on all nodes verify that all nodes are available again by running the following command from anywhere kubectl can access the cluster:

kubectl get nodes

The STATUS column should show Ready for all your nodes, and the version number should be updated.
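With many nodes, scanning that output by eye is error prone. This is a minimal sketch, assuming the default kubectl get nodes columns (NAME STATUS ROLES AGE VERSION); nodes_not_upgraded is a hypothetical helper. A node that is still cordoned shows as Ready,SchedulingDisabled and is reported too, which catches a forgotten uncordon.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print every
# node that is not plainly Ready or does not report the expected version ($1).
# Assumes STATUS is the 2nd column and VERSION the 5th.
nodes_not_upgraded() {
  awk -v want="$1" 'NR > 1 && ($2 != "Ready" || $5 != want) { print $1 }'
}
```

For example, kubectl get nodes | nodes_not_upgraded v1.22.x (with x replaced by your patch version) should print nothing once every node is done.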

Recovering from a failure state

If kubeadm upgrade fails and does not roll back, for example because of an unexpected shutdown during execution, you can run kubeadm upgrade again.

This command is idempotent and eventually makes sure that the actual state is the desired state you declare.

To recover from a bad state, you can also run kubeadm upgrade apply --force without changing the version that your cluster is running.

During upgrade kubeadm writes the following backup folders under /etc/kubernetes/tmp:

- kubeadm-backup-etcd-<date>-<time>
- kubeadm-backup-manifests-<date>-<time>

kubeadm-backup-etcd contains a backup of the local etcd member data for this control plane Node. In case of an etcd upgrade failure and if the automatic rollback does not work, the contents of this folder can be manually restored in /var/lib/etcd. In case external etcd is used this backup folder will be empty.

kubeadm-backup-manifests contains a backup of the static Pod manifest files for this control plane Node. In case of an upgrade failure and if the automatic rollback does not work, the contents of this folder can be manually restored in /etc/kubernetes/manifests. If for some reason there is no difference between a pre-upgrade and post-upgrade manifest file for a certain component, a backup file for it will not be written.
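If you do need a manual restore, the first step is finding the most recent backup folder. This is a minimal sketch, assuming the <date>-<time> suffix sorts chronologically as plain text; latest_kubeadm_backup is a hypothetical helper.

```shell
# Hypothetical helper: print the newest kubeadm backup folder of a given kind
# ("etcd" or "manifests") under a base directory (default /etc/kubernetes/tmp).
# Prints nothing if no matching folder exists.
latest_kubeadm_backup() {
  local kind="$1" base="${2:-/etc/kubernetes/tmp}"
  ls -1d "$base"/kubeadm-backup-"$kind"-* 2>/dev/null | sort | tail -n 1
}
```

For example, latest_kubeadm_backup manifests prints the most recent manifests backup; inspect its contents before copying anything back into /etc/kubernetes/manifests or /var/lib/etcd.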

How it works

kubeadm upgrade apply does the following:

- Checks that your cluster is in an upgradeable state:
  - The API server is reachable
  - All nodes are in the Ready state
  - The control plane is healthy
- Enforces the version skew policies.
- Makes sure the control plane images are available or available to pull to the machine.
- Generates replacements and/or uses user supplied overwrites if component configs require version upgrades.
- Upgrades the control plane components or rolls back if any of them fails to come up.
- Applies the new CoreDNS and kube-proxy manifests and makes sure that all necessary RBAC rules are created.
- Creates new certificate and key files of the API server and backs up old files if they're about to expire in 180 days.
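To check yourself whether a certificate falls inside that 180-day window, openssl can compare its expiry against a point in the future. This is a minimal sketch, assuming openssl is installed; expires_within_days is a hypothetical helper built on openssl x509 -checkend, which takes a number of seconds and exits non-zero if the certificate will have expired by then.

```shell
# Hypothetical helper: succeed if the PEM certificate in $1 expires within $2
# days (180 by default). Returns 2 if the file cannot be read.
expires_within_days() {
  local cert="$1" days="${2:-180}"
  [ -r "$cert" ] || return 2
  # -checkend exits 1 when the certificate expires within the given seconds,
  # so the result is inverted to mean "yes, it expires soon".
  ! openssl x509 -in "$cert" -noout -checkend $((days * 86400)) >/dev/null
}
```

For example, expires_within_days /etc/kubernetes/pki/apiserver.crt 180 && echo "expires within 180 days".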

kubeadm upgrade node does the following on additional control plane nodes:

- Fetches the kubeadm ClusterConfiguration from the cluster.
- Optionally backs up the kube-apiserver certificate.

- Upgrades the static Pod manifests for the control plane components.

- Upgrades the kubelet configuration for this node.

kubeadm upgrade node does the following on worker nodes:

- Fetches the kubeadm ClusterConfiguration from the cluster.
- Upgrades the kubelet configuration for this node.

1.4 - Adding Windows nodes

Kubernetes v1.18 [beta]

You can use Kubernetes to run a mixture of Linux and Windows nodes, so you can mix Pods that run on Linux with Pods that run on Windows. This page shows how to register Windows nodes to your cluster.

Before you begin

Your Kubernetes server must be at or later than version 1.17. To check the version, enter kubectl version.

- Obtain a Windows Server 2019 license (or higher) in order to configure the Windows node that hosts Windows containers. If you are using VXLAN/Overlay networking you must also have KB4489899 installed.

- A Linux-based Kubernetes kubeadm cluster in which you have access to the control plane (see Creating a single control-plane cluster with kubeadm).

Objectives

- Register a Windows node to the cluster

- Configure networking so Pods and Services on Linux and Windows can communicate with each other

Getting Started: Adding a Windows Node to Your Cluster

Networking Configuration

Once you have a Linux-based Kubernetes control-plane node you are ready to choose a networking solution. This guide illustrates using Flannel in VXLAN mode for simplicity.

Configuring Flannel

- Prepare Kubernetes control plane for Flannel

Some minor preparation is recommended on the Kubernetes control plane in our cluster. It is recommended to enable bridged IPv4 traffic to iptables chains when using Flannel. The following command must be run on all Linux nodes:

sudo sysctl net.bridge.bridge-nf-call-iptables=1

- Download & configure Flannel for Linux

Download the most recent Flannel manifest:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Modify the net-conf.json section of the flannel manifest in order to set the VNI to 4096 and the Port to 4789. It should look as follows:

net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan",
        "VNI": 4096,
        "Port": 4789
      }
    }

Note: The VNI must be set to 4096 and port 4789 for Flannel on Linux to interoperate with Flannel on Windows. See the VXLAN documentation for an explanation of these fields.

Note: To use L2Bridge/Host-gateway mode instead, change the value of Type to "host-gw" and omit VNI and Port.

- Apply the Flannel manifest and validate

Let's apply the Flannel configuration:

kubectl apply -f kube-flannel.yml

After a few minutes, you should see all the pods as running if the Flannel pod network was deployed.

kubectl get pods -n kube-system

The output should include the Linux flannel DaemonSet as running:

NAMESPACE     NAME                    READY   STATUS    RESTARTS   AGE
...
kube-system   kube-flannel-ds-54954   1/1     Running   0          1m

Add Windows Flannel and kube-proxy DaemonSets

Now you can add Windows-compatible versions of Flannel and kube-proxy. In order to ensure that you get a compatible version of kube-proxy, you'll need to substitute the tag of the image. The following example shows usage for Kubernetes v1.22.0, but you should adjust the version for your own deployment.

curl -L https://github.com/kubernetes-sigs/sig-windows-tools/releases/latest/download/kube-proxy.yml | sed 's/VERSION/v1.22.0/g' | kubectl apply -f -
kubectl apply -f https://github.com/kubernetes-sigs/sig-windows-tools/releases/latest/download/flannel-overlay.yml

Note: If you're using host-gateway, use https://github.com/kubernetes-sigs/sig-windows-tools/releases/latest/download/flannel-host-gw.yml instead.

Note: If you're using a network interface other than Ethernet (for example, "Ethernet0 2") on the Windows nodes, you have to modify the line:

wins cli process run --path /k/flannel/setup.exe --args "--mode=overlay --interface=Ethernet"

in the flannel-host-gw.yml or flannel-overlay.yml file and specify your interface accordingly.

# Example
curl -L https://github.com/kubernetes-sigs/sig-windows-tools/releases/latest/download/flannel-overlay.yml | sed 's/Ethernet/Ethernet0 2/g' | kubectl apply -f -
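The sed example replaces every occurrence of the string Ethernet, so running it against a manifest that was already edited would mangle the name ("Ethernet0 2" would become "Ethernet0 20 2"). A more targeted alternative, sketched here in Python, rewrites only the `--interface=` argument. It assumes the argument appears exactly as in the line quoted above, with `--interface` as the last option inside the quoted `--args` string; `set_flannel_interface` is a hypothetical helper name.

```python
import re

# Matches the --interface argument passed to flannel's setup.exe, e.g.
#   --args "--mode=overlay --interface=Ethernet"
# The name runs up to the closing double quote of the --args string.
_INTERFACE_ARG = re.compile(r'--interface=[^"]*')


def set_flannel_interface(manifest: str, interface: str) -> str:
    """Rewrite only the --interface=... argument in a Windows Flannel manifest."""
    if '"' in interface:
        raise ValueError("interface name must not contain double quotes")
    # A function replacement avoids backslash/group escaping in the new name.
    new_manifest, count = _INTERFACE_ARG.subn(
        lambda _match: f"--interface={interface}", manifest
    )
    if count == 0:
        raise ValueError("no --interface= argument found in manifest")
    return new_manifest
```

Unlike the global sed substitution, applying this twice with the same name leaves the manifest unchanged.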

Joining a Windows worker node

Note: All code snippets in Windows sections are to be run in a PowerShell environment with elevated permissions (Administrator) on the Windows worker node.

Install Docker EE

Install the Containers feature

Install-WindowsFeature -Name containers

Install Docker. Instructions to do so are available at Install Docker Engine - Enterprise on Windows Servers.

Install wins, kubelet, and kubeadm

curl.exe -LO https://raw.githubusercontent.com/kubernetes-sigs/sig-windows-tools/master/kubeadm/scripts/PrepareNode.ps1

.\PrepareNode.ps1 -KubernetesVersion v1.22.0

Run kubeadm to join the node

Use the command that was given to you when you ran kubeadm init on a control plane host.

If you no longer have this command, or the token has expired, you can run kubeadm token create --print-join-command (on a control plane host) to generate a new token and join command.

Install containerD

curl.exe -LO https://github.com/kubernetes-sigs/sig-windows-tools/releases/latest/download/Install-Containerd.ps1

.\Install-Containerd.ps1

Note: To install a specific version of containerD, specify the version with -ContainerDVersion.

# Example
.\Install-Containerd.ps1 -ContainerDVersion 1.4.1

Note: If you're using a network interface other than Ethernet (for example, "Ethernet0 2") on the Windows nodes, specify the name with -netAdapterName.

# Example
.\Install-Containerd.ps1 -netAdapterName "Ethernet0 2"

Install wins, kubelet, and kubeadm

curl.exe -LO https://raw.githubusercontent.com/kubernetes-sigs/sig-windows-tools/master/kubeadm/scripts/PrepareNode.ps1

.\PrepareNode.ps1 -KubernetesVersion v1.22.0 -ContainerRuntime containerD

Run kubeadm to join the node

Use the command that was given to you when you ran kubeadm init on a control plane host.

If you no longer have this command, or the token has expired, you can run kubeadm token create --print-join-command (on a control plane host) to generate a new token and join command.

Note: If using CRI-containerD, add --cri-socket "npipe:////./pipe/containerd-containerd" to the kubeadm join call.
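If you automate the join step, the socket flag can be appended to the command printed by kubeadm token create --print-join-command. The Python sketch below assumes that printed command is available as a string; `containerd_join_args` is a hypothetical helper, and the token and hash in the usage note are placeholders, not real values. It returns an argument list rather than one string, which sidesteps PowerShell quoting of the npipe path.

```python
import shlex

# Named-pipe CRI endpoint given in the note above for CRI-containerD.
CONTAINERD_CRI_SOCKET = "npipe:////./pipe/containerd-containerd"


def containerd_join_args(print_join_command_output: str) -> list[str]:
    """Split a `kubeadm join ...` command and add the containerd CRI socket."""
    args = shlex.split(print_join_command_output.strip())
    if args[:2] != ["kubeadm", "join"]:
        raise ValueError("expected output of `kubeadm token create --print-join-command`")
    # Only add the flag if a CRI socket has not been chosen already.
    if not any(a == "--cri-socket" or a.startswith("--cri-socket=") for a in args):
        args += ["--cri-socket", CONTAINERD_CRI_SOCKET]
    return args
```

For example, `kubeadm join 192.0.2.10:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:<hash>` becomes the same argument list followed by `--cri-socket npipe:////./pipe/containerd-containerd`.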

Verifying your installation

You should now be able to view the Windows node in your cluster by running:

kubectl get nodes -o wide

If your new node is in the NotReady state, it is likely because the flannel image is still downloading.

You can check the progress as before by checking on the flannel pods in the kube-system namespace:

kubectl -n kube-system get pods -l app=flannel

Once the flannel Pod is running, your node should enter the Ready state and then be available to handle workloads.
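To check for the NotReady to Ready transition from a script rather than by rereading kubectl get nodes, you could parse the JSON form of the node list. This Python sketch assumes its input is the output of kubectl get nodes -o json and relies on the standard kubernetes.io/os node label and the Ready node condition; `windows_node_readiness` is a hypothetical helper name.

```python
import json


def windows_node_readiness(nodes_json: str) -> dict[str, bool]:
    """Map each Windows node name to whether its Ready condition is "True".

    `nodes_json` is assumed to be the output of `kubectl get nodes -o json`.
    """
    readiness = {}
    for node in json.loads(nodes_json).get("items", []):
        labels = node.get("metadata", {}).get("labels", {})
        if labels.get("kubernetes.io/os") != "windows":
            continue  # skip Linux nodes
        conditions = node.get("status", {}).get("conditions", [])
        readiness[node["metadata"]["name"]] = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
    return readiness
```

You could feed it with `subprocess.run(["kubectl", "get", "nodes", "-o", "json"], capture_output=True, text=True, check=True).stdout` and poll until every value is True.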

What's next

1.5 - Upgrading Windows nodes

Kubernetes v1.18 [beta]

This page explains how to upgrade a Windows node created with kubeadm.

Before you begin

You need to have a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with your cluster. It is recommended to run this tutorial on a cluster with at least two nodes that are not acting as control plane hosts. If you do not already have a cluster, you can create one by using minikube or you can use one of these Kubernetes playgrounds:

Your Kubernetes server must be at or later than version 1.17. To check the version, enter kubectl version.

- Familiarize yourself with the process for upgrading the rest of your kubeadm cluster. You will want to upgrade the control plane nodes before upgrading your Windows nodes.
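The reason for this ordering is that a kubelet must not be newer than the API server it talks to. The Python sketch below encodes the version skew rule as it stood around Kubernetes v1.22: the kubelet must not be newer than kube-apiserver and may trail it by at most two minor versions. Later releases widened that gap, so treat `MAX_KUBELET_MINOR_LAG` as an assumption to check against the version skew policy for your release. The function names are hypothetical, and only major and minor versions are compared.

```python
import re

# Assumption: v1.22-era skew policy. The kubelet may trail kube-apiserver by up
# to two minor versions and must never be newer. Check the policy for your release.
MAX_KUBELET_MINOR_LAG = 2

_VERSION = re.compile(r"^v?(\d+)\.(\d+)\.(\d+)")


def _major_minor(version: str) -> tuple[int, int]:
    match = _VERSION.match(version)
    if not match:
        raise ValueError(f"not a Kubernetes version: {version!r}")
    return int(match.group(1)), int(match.group(2))


def kubelet_upgrade_allowed(apiserver: str, target_kubelet: str) -> bool:
    """True if running `target_kubelet` against `apiserver` respects the skew rule."""
    api_major, api_minor = _major_minor(apiserver)
    kubelet_major, kubelet_minor = _major_minor(target_kubelet)
    if api_major != kubelet_major:
        return False
    lag = api_minor - kubelet_minor
    return 0 <= lag <= MAX_KUBELET_MINOR_LAG
```

For example, `kubelet_upgrade_allowed("v1.21.3", "v1.22.0")` is False: that is the situation of upgrading a Windows node before its control plane.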

Upgrading worker nodes

Upgrade kubeadm

From the Windows node, upgrade kubeadm:

# replace v1.22.0 with your desired version
curl.exe -Lo C:\k\kubeadm.exe https://dl.k8s.io//bin/windows/amd64/kubeadm.exe

Drain the node

From a machine with access to the Kubernetes API, prepare the node for maintenance by marking it unschedulable and evicting the workloads:

# replace <node-to-drain> with the name of your node you are draining
kubectl drain <node-to-drain> --ignore-daemonsets

You should see output similar to this:

node/ip-172-31-85-18 cordoned
node/ip-172-31-85-18 drained

Upgrade the kubelet configuration

From the Windows node, run the following command to sync the new kubelet configuration:

kubeadm upgrade node

Upgrade kubelet

From the Windows node, upgrade and restart the kubelet:

stop-service kubelet
curl.exe -Lo C:\k\kubelet.exe https://dl.k8s.io//bin/windows/amd64/kubelet.exe
restart-service kubelet

Uncordon the node

From a machine with access to the Kubernetes API, bring the node back online by marking it schedulable:

# replace <node-to-drain> with the name of your node
kubectl uncordon <node-to-drain>

Upgrade kube-proxy


From a machine with access to the Kubernetes API, run the following, again replacing v1.22.0 with your desired version:

curl -L https://github.com/kubernetes-sigs/sig-windows-tools/releases/latest/download/kube-proxy.yml | sed 's/VERSION/v1.22.0/g' | kubectl apply -f -

2 - Migrating from dockershim

This section presents information you need to know when migrating from dockershim to other container runtimes.

Since the announcement of dockershim deprecation in Kubernetes 1.20, there have been questions about how this affects various workloads and Kubernetes installations. The Dockershim Deprecation FAQ blog post can help you understand the problem better.

It is recommended to migrate from dockershim to alternative container runtimes. Check out the container runtimes section to learn about your options. Make sure to report any issues you encounter during the migration, so that they can be fixed in a timely manner and your cluster is ready for dockershim removal.

2.1 - Check whether Dockershim deprecation affects you

The dockershim component of Kubernetes allows you to use Docker as Kubernetes's container runtime.

Kubernetes' built-in dockershim component was deprecated in release v1.20.

This page explains how your cluster could be using Docker as a container runtime, provides details on the role that dockershim plays when in use, and shows steps you can take to check whether any workloads could be affected by dockershim deprecation.

Finding if your app has dependencies on Docker

If you are using Docker for building your application containers, you can still run these containers on any container runtime. This use of Docker does not count as a dependency on Docker as a container runtime.

When an alternative container runtime is used, executing Docker commands may either not work or yield unexpected output. This is how you can find whether you have a dependency on Docker:

- Make sure no privileged Pods execute Docker commands.

- Check that scripts and apps running on nodes outside of Kubernetes infrastructure do not execute Docker commands. These might include:

  - SSH to nodes to troubleshoot;

  - Node startup scripts;

  - Monitoring and security agents installed on nodes directly.

- Third-party tools that perform the above-mentioned privileged operations. See Migrating telemetry and security agents from dockershim for more information.

- Make sure there are no indirect dependencies on dockershim behavior. This is an edge case and unlikely to affect your application. Some tooling may be configured to react to Docker-specific behaviors, for example, to raise an alert on specific metrics or to search for a specific log message as part of troubleshooting instructions. If you have such tooling configured, test the behavior on a test cluster before migration.

Dependency on Docker explained

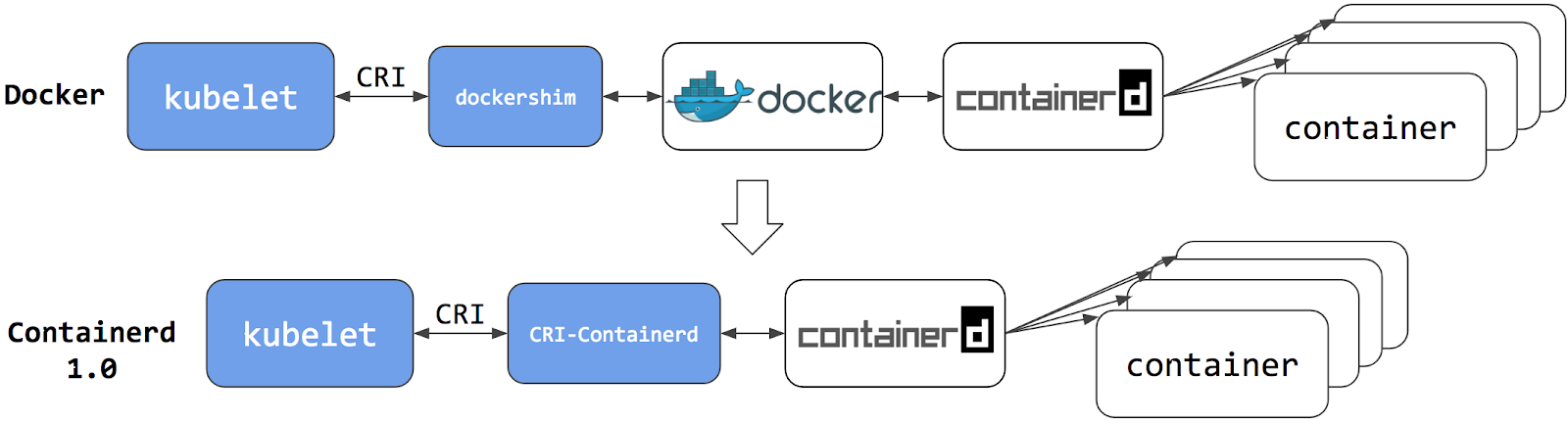

A container runtime is software that can execute the containers that make up a Kubernetes pod. Kubernetes is responsible for orchestration and scheduling of Pods; on each node, the kubelet uses the container runtime interface as an abstraction so that you can use any compatible container runtime.

In its earliest releases, Kubernetes offered compatibility with one container runtime: Docker.

Later in the Kubernetes project's history, cluster operators wanted to adopt additional container runtimes.

The CRI was designed to allow this kind of flexibility, and the kubelet began supporting CRI. However, because Docker existed before the CRI specification was invented, the Kubernetes project created an adapter component, dockershim. The dockershim adapter allows the kubelet to interact with Docker as if Docker were a CRI-compatible runtime.

You can read about it in the Kubernetes Containerd integration goes GA blog post.

Switching to Containerd as a container runtime eliminates the middleman. All the same containers can be run by container runtimes like Containerd as before. But now, since containers schedule directly with the container runtime, they are not visible to Docker. So any Docker tooling or fancy UI you might have used before to check on these containers is no longer available.

You cannot get container information using the docker ps or docker inspect commands. As you cannot list containers, you cannot get logs, stop containers, or execute something inside a container using docker exec.

Note: If you're running workloads via Kubernetes, the best way to stop a container is through the Kubernetes API rather than directly through the container runtime (this advice applies for all container runtimes, not only Docker).

You can still pull images or build them using the docker build command. However, images built or pulled by Docker are not visible to the container runtime and Kubernetes. They need to be pushed to a registry before Kubernetes can use them.

2.2 - Migrating telemetry and security agents from dockershim

With Kubernetes 1.20, dockershim was deprecated. From the Dockershim Deprecation FAQ you might already know that most apps do not have a direct dependency on the runtime hosting their containers. However, there are still a lot of telemetry and security agents that depend on Docker to collect container metadata, logs, and metrics. This document aggregates information on how to detect these dependencies and links on how to migrate these agents to use generic tools or alternative runtimes.

Telemetry and security agents

There are a few ways agents may run on a Kubernetes cluster. Agents may run on nodes directly or as DaemonSets.

Why do telemetry agents rely on Docker?

Historically, Kubernetes was built on top of Docker. Kubernetes managed networking and scheduling, while Docker placed and operated containers on a node. So you could get scheduling-related metadata like a pod name from Kubernetes and container state information from Docker. Over time, more runtimes were created to manage containers. There are also projects and Kubernetes features that generalize container status information extraction across many runtimes.

Some agents are tied specifically to the Docker tool. The agents may run commands like docker ps or docker top to list containers and processes, or docker logs to subscribe to Docker logs. With the deprecation of Docker as a container runtime, these commands will not work any longer.

Identify DaemonSets that depend on Docker

If a pod wants to make calls to the dockerd running on the node, the pod must either:

- mount the filesystem containing the Docker daemon's privileged socket, as a volume; or

- mount the specific path of the Docker daemon's privileged socket directly, also as a volume.

For example, on COS images, Docker exposes its Unix domain socket at /var/run/docker.sock. This means that the pod spec will include a hostPath volume mount of /var/run/docker.sock.

Here's a sample shell script to find Pods that have a mount directly mapping the Docker socket. This script outputs the namespace and name of the pod. You can remove the grep '/var/run/docker.sock' to review other mounts.

kubectl get pods --all-namespaces \
-o=jsonpath='{range .items[*]}{"\n"}{.metadata.namespace}{":\t"}{.metadata.name}{":\t"}{range .spec.volumes[*]}{.hostPath.path}{", "}{end}{end}' \
| sort \
| grep '/var/run/docker.sock'

Note: There are alternative ways for a pod to access Docker on the host. For instance, the parent directory /var/run may be mounted instead of the full path (like in this example). The script above only detects the most common uses.
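To also catch the parent-directory case from the note, you could inspect the JSON pod list instead of grepping. This Python sketch assumes its input is the output of kubectl get pods --all-namespaces -o json and flags any hostPath volume that is the socket itself or one of its ancestor directories. It does not resolve symlinks (on many distributions /var/run points to /run, so a /run mount would be missed), and `pods_exposing_docker_socket` is a hypothetical helper name.

```python
import json
import posixpath

# Socket path from the example above; other images may use a different path.
DOCKER_SOCKET = "/var/run/docker.sock"


def pods_exposing_docker_socket(pods_json: str, socket: str = DOCKER_SOCKET) -> list[str]:
    """Return sorted "namespace/name" entries for pods whose hostPath volumes expose `socket`.

    A hostPath exposes the socket if it is the socket or one of its ancestor
    directories (such as /var/run). Symlinks are not resolved.
    """
    hits = []
    for pod in json.loads(pods_json).get("items", []):
        for volume in pod.get("spec", {}).get("volumes") or []:
            path = (volume.get("hostPath") or {}).get("path")
            if not path:
                continue  # not a hostPath volume
            path = posixpath.normpath(path)
            if path == socket or socket.startswith(path.rstrip("/") + "/"):
                meta = pod["metadata"]
                hits.append(f"{meta['namespace']}/{meta['name']}")
                break  # one matching volume is enough for this pod
    return sorted(hits)
```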

Detecting Docker dependency from node agents

If your cluster nodes are customized and have additional security and telemetry agents installed on them, make sure to check with the agent's vendor whether it has a dependency on Docker.

Telemetry and security agent vendors

We keep the work-in-progress version of migration instructions for various telemetry and security agent vendors in a Google doc. Please contact the vendor to get up-to-date instructions for migrating from dockershim.

3 - Certificates

When using client certificate authentication, you can generate certificates manually through easyrsa, openssl or cfssl.

easyrsa

easyrsa can manually generate certificates for your cluster.

Download, unpack, and initialize the patched version of easyrsa3.

curl -LO https://storage.googleapis.com/kubernetes-release/easy-rsa/easy-rsa.tar.gz
tar xzf easy-rsa.tar.gz
cd easy-rsa-master/easyrsa3
./easyrsa init-pki

Generate a new certificate authority (CA). --batch sets automatic mode; --req-cn specifies the Common Name (CN) for the CA's new root certificate.

./easyrsa --batch "--req-cn=${MASTER_IP}@`date +%s`" build-ca nopass

Generate server certificate and key.

The argument --subject-alt-name sets the possible IPs and DNS names the API server will be accessed with. The MASTER_CLUSTER_IP is usually the first IP from the service CIDR that is specified as the --service-cluster-ip-range argument for both the API server and the controller manager component. The argument --days is used to set the number of days after which the certificate expires. The sample below also assumes that you are using cluster.local as the default DNS domain name.

./easyrsa --subject-alt-name="IP:${MASTER_IP},"\
"IP:${MASTER_CLUSTER_IP},"\
"DNS:kubernetes,"\
"DNS:kubernetes.default,"\
"DNS:kubernetes.default.svc,"\
"DNS:kubernetes.default.svc.cluster,"\
"DNS:kubernetes.default.svc.cluster.local" \
--days=10000 \
build-server-full server nopass

Copy pki/ca.crt, pki/issued/server.crt, and pki/private/server.key to your directory.

Fill in and add the following parameters into the API server start parameters:

--client-ca-file=/yourdirectory/ca.crt
--tls-cert-file=/yourdirectory/server.crt
--tls-private-key-file=/yourdirectory/server.key
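The MASTER_CLUSTER_IP used in the easyrsa steps is usually the first address of the service CIDR. As a sketch, Python's standard ipaddress module can compute it and assemble the matching --subject-alt-name value. The function names are hypothetical, and the service CIDR 10.96.0.0/12 and master IP 192.0.2.10 in the usage note are example values, not something this page prescribes.

```python
import ipaddress


def master_cluster_ip(service_cidr: str) -> str:
    """First usable address of the --service-cluster-ip-range CIDR."""
    network = ipaddress.ip_network(service_cidr, strict=True)
    return str(next(network.hosts()))


def easyrsa_subject_alt_name(master_ip: str, service_cidr: str,
                             dns_domain: str = "cluster.local") -> str:
    """Build a --subject-alt-name value with the same entries as the easyrsa command."""
    names = ["kubernetes", "kubernetes.default", "kubernetes.default.svc"]
    # "cluster.local" expands to ...svc.cluster and ...svc.cluster.local.
    suffix = "kubernetes.default.svc"
    for label in dns_domain.split("."):
        suffix = f"{suffix}.{label}"
        names.append(suffix)
    entries = [f"IP:{master_ip}", f"IP:{master_cluster_ip(service_cidr)}"]
    entries += [f"DNS:{name}" for name in names]
    return ",".join(entries)
```

For example, `easyrsa_subject_alt_name("192.0.2.10", "10.96.0.0/12")` starts with `IP:192.0.2.10,IP:10.96.0.1` followed by the five DNS entries from the command above.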

openssl

openssl can manually generate certificates for your cluster.

Generate a 2048-bit ca.key:

openssl genrsa -out ca.key 2048

Using ca.key, generate ca.crt (use -days to set the certificate validity period):

openssl req -x509 -new -nodes -key ca.key -subj "/CN=${MASTER_IP}" -days 10000 -out ca.crt

Generate a 2048-bit server.key:

openssl genrsa -out server.key 2048

Create a config file for generating a Certificate Signing Request (CSR). Be sure to substitute the values marked with angle brackets (e.g. <MASTER_IP>) with real values before saving this to a file (e.g. csr.conf). Note that the value for MASTER_CLUSTER_IP is the service cluster IP for the API server as described in the previous subsection. The sample below also assumes that you are using cluster.local as the default DNS domain name.

[ req ]
default_bits = 2048
prompt = no
default_md = sha256
req_extensions = req_ext
distinguished_name = dn

[ dn ]
C = <country>
ST = <state>
L = <city>
O = <organization>
OU = <organization unit>
CN = <MASTER_IP>

[ req_ext ]
subjectAltName = @alt_names

[ alt_names ]
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster
DNS.5 = kubernetes.default.svc.cluster.local
IP.1 = <MASTER_IP>
IP.2 = <MASTER_CLUSTER_IP>

[ v3_ext ]
authorityKeyIdentifier=keyid,issuer:always
basicConstraints=CA:FALSE
keyUsage=keyEncipherment,dataEncipherment
extendedKeyUsage=serverAuth,clientAuth
subjectAltName=@alt_names

Generate the certificate signing request based on the config file:

openssl req -new -key server.key -out server.csr -config csr.conf

Generate the server certificate using the ca.key, ca.crt and server.csr:

openssl x509 -req -in server.csr -CA ca.crt -CAkey ca.key \
    -CAcreateserial -out server.crt -days 10000 \
    -extensions v3_ext -extfile csr.conf

View the certificate signing request:

openssl req -noout -text -in ./server.csr

View the certificate:

openssl x509 -noout -text -in ./server.crt

Finally, add the same parameters into the API server start parameters.
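A csr.conf that still contains an angle-bracket placeholder can end up with that literal text in the certificate or cause a confusing openssl error, so a check before running openssl can help. This Python sketch, with hypothetical helper names, fills `<KEY>` placeholders from a dictionary and refuses to return text that still has any left; the sample values in the usage note are illustrative.

```python
import re

# Angle-bracket placeholders such as <MASTER_IP> or <organization unit>.
_PLACEHOLDER = re.compile(r"<[^<>\n]+>")


def unfilled_placeholders(config_text: str) -> list[str]:
    """Return the distinct placeholders still present, in order of first appearance."""
    seen = []
    for match in _PLACEHOLDER.finditer(config_text):
        if match.group(0) not in seen:
            seen.append(match.group(0))
    return seen


def fill_placeholders(config_text: str, values: dict[str, str]) -> str:
    """Substitute <KEY> placeholders, refusing to return a partially filled file."""
    for key, value in values.items():
        config_text = config_text.replace(f"<{key}>", value)
    missing = unfilled_placeholders(config_text)
    if missing:
        raise ValueError(f"unfilled placeholders: {', '.join(missing)}")
    return config_text
```

For example, filling only `{"MASTER_IP": "192.0.2.10"}` into the csr.conf above raises a ValueError that lists `<country>`, `<MASTER_CLUSTER_IP>`, and the other remaining placeholders.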

cfssl

cfssl is another tool for certificate generation.

Download, unpack and prepare the command line tools as shown below. Note that you may need to adapt the sample commands based on the hardware architecture and cfssl version you are using.

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64 -o cfssl
chmod +x cfssl
curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64 -o cfssljson
chmod +x cfssljson
curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl-certinfo_1.5.0_linux_amd64 -o cfssl-certinfo
chmod +x cfssl-certinfo

Create a directory to hold the artifacts and initialize cfssl:

mkdir cert
cd cert
../cfssl print-defaults config > config.json
../cfssl print-defaults csr > csr.json

Create a JSON config file for generating the CA file, for example, ca-config.json:

{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "8760h"
      }
    }
  }
}

Create a JSON config file for CA certificate signing request (CSR), for example, ca-csr.json. Be sure to replace the values marked with angle brackets with real values you want to use.

{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "<country>",
    "ST": "<state>",
    "L": "<city>",
    "O": "<organization>",
    "OU": "<organization unit>"
  }]
}

Generate CA key (ca-key.pem) and certificate (ca.pem):

../cfssl gencert -initca ca-csr.json | ../cfssljson -bare ca

Create a JSON config file for generating keys and certificates for the API server, for example, server-csr.json. Be sure to replace the values in angle brackets with real values you want to use. The MASTER_CLUSTER_IP is the service cluster IP for the API server as described in the previous subsection. The sample below also assumes that you are using cluster.local as the default DNS domain name.

{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "<MASTER_IP>",
    "<MASTER_CLUSTER_IP>",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "<country>",
    "ST": "<state>",
    "L": "<city>",
    "O": "<organization>",
    "OU": "<organization unit>"
  }]
}

Generate the key and certificate for the API server, which are by default saved into the files server-key.pem and server.pem respectively:

../cfssl gencert -ca=ca.pem -ca-key=ca-key.pem \
    --config=ca-config.json -profile=kubernetes \
    server-csr.json | ../cfssljson -bare server

Distributing Self-Signed CA Certificate

A client node may refuse to recognize a self-signed CA certificate as valid. For a non-production deployment, or for a deployment that runs behind a company firewall, you can distribute a self-signed CA certificate to all clients and refresh the local list of valid certificates.

On each client, perform the following operations:

sudo cp ca.crt /usr/local/share/ca-certificates/kubernetes.crt
sudo update-ca-certificates

Updating certificates in /etc/ssl/certs...
1 added, 0 removed; done.
Running hooks in /etc/ca-certificates/update.d....
done.

Certificates API

You can use the certificates.k8s.io API to provision x509 certificates to use for authentication as documented here.

4 - Manage Memory, CPU, and API Resources

4.1 - Configure Default Memory Requests and Limits for a Namespace

This page shows how to configure default memory requests and limits for a namespace. If a Container is created in a namespace that has a default memory limit, and the Container does not specify its own memory limit, then the Container is assigned the default memory limit. Kubernetes assigns a default memory request under certain conditions that are explained later in this topic.

Before you begin

You need to have a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with your cluster. It is recommended to run this tutorial on a cluster with at least two nodes that are not acting as control plane hosts. If you do not already have a cluster, you can create one by using minikube or you can use one of these Kubernetes playgrounds:

To check the version, enter kubectl version.

Each node in your cluster must have at least 2 GiB of memory.

Create a namespace

Create a namespace so that the resources you create in this exercise are isolated from the rest of your cluster.

kubectl create namespace default-mem-example

Create a LimitRange and a Pod

Here's the configuration file for a LimitRange object. The configuration specifies a default memory request and a default memory limit.

apiVersion: v1
kind: LimitRange
metadata:
  name: mem-limit-range
spec:
  limits:
  - default:
      memory: 512Mi
    defaultRequest:
      memory: 256Mi
    type: Container

Create the LimitRange in the default-mem-example namespace:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-defaults.yaml --namespace=default-mem-example

Now if a Container is created in the default-mem-example namespace, and the Container does not specify its own values for memory request and memory limit, the Container is given a default memory request of 256 MiB and a default memory limit of 512 MiB.

Here's the configuration file for a Pod that has one Container. The Container does not specify a memory request and limit.

apiVersion: v1
kind: Pod
metadata:
  name: default-mem-demo
spec:
  containers:
  - name: default-mem-demo-ctr
    image: nginx

Create the Pod.

kubectl apply -f https://k8s.io/examples/admin/resource/memory-defaults-pod.yaml --namespace=default-mem-example

View detailed information about the Pod:

kubectl get pod default-mem-demo --output=yaml --namespace=default-mem-example

The output shows that the Pod's Container has a memory request of 256 MiB and a memory limit of 512 MiB. These are the default values specified by the LimitRange.

containers:
- image: nginx
  imagePullPolicy: Always
  name: default-mem-demo-ctr
  resources:
    limits:
      memory: 512Mi
    requests:
      memory: 256Mi

Delete your Pod:

kubectl delete pod default-mem-demo --namespace=default-mem-example

What if you specify a Container's limit, but not its request?

Here's the configuration file for a Pod that has one Container. The Container specifies a memory limit, but not a request:

apiVersion: v1
kind: Pod
metadata:
  name: default-mem-demo-2
spec:
  containers:
  - name: default-mem-demo-2-ctr
    image: nginx
    resources:
      limits:
        memory: "1Gi"

Create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-defaults-pod-2.yaml --namespace=default-mem-example

View detailed information about the Pod:

kubectl get pod default-mem-demo-2 --output=yaml --namespace=default-mem-example

The output shows that the Container's memory request is set to match its memory limit. Notice that the Container was not assigned the default memory request value of 256Mi.

resources:
  limits:
    memory: 1Gi
  requests:
    memory: 1Gi

What if you specify a Container's request, but not its limit?

Here's the configuration file for a Pod that has one Container. The Container specifies a memory request, but not a limit:

apiVersion: v1
kind: Pod
metadata:
  name: default-mem-demo-3
spec:
  containers:
  - name: default-mem-demo-3-ctr
    image: nginx
    resources:
      requests:
        memory: "128Mi"

Create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-defaults-pod-3.yaml --namespace=default-mem-example

View the Pod's specification:

kubectl get pod default-mem-demo-3 --output=yaml --namespace=default-mem-example

The output shows that the Container's memory request is set to the value specified in the Container's configuration file. The Container's memory limit is set to 512Mi, which is the default memory limit for the namespace.

resources:
  limits:
    memory: 512Mi
  requests:
    memory: 128Mi
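The three cases above can be summarized in a small Python model. This is a simplified sketch, not the admission plugin's code: `apply_container_defaults` is a hypothetical name, it handles one resource at a time, it skips validation (for example, a request larger than the default limit), and it folds in the API server's own Pod defaulting, which copies a limit into an unset request and is what produces the second case.

```python
def apply_container_defaults(resources: dict, default_limit: str,
                             default_request: str, resource: str = "memory") -> dict:
    """Simplified model of a container's resources after namespace defaults apply.

    `resources` mirrors a container's `resources` stanza, for example
    {"limits": {"memory": "1Gi"}}. The input dict is not modified.
    """
    limits = dict(resources.get("limits", {}))
    requests = dict(resources.get("requests", {}))
    # Pod defaulting: a limit without a request copies the limit into the request.
    if resource in limits and resource not in requests:
        requests[resource] = limits[resource]
    # LimitRange defaulting: fill in whatever is still unset.
    limits.setdefault(resource, default_limit)
    requests.setdefault(resource, default_request)
    return {"limits": limits, "requests": requests}
```

With the defaults from this page (`"512Mi"` and `"256Mi"`), an empty stanza yields 512Mi/256Mi, a 1Gi limit yields a 1Gi request, and a 128Mi request gets the 512Mi default limit, matching the outputs above.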

Motivation for default memory limits and requests

If your namespace has a resource quota, it is helpful to have a default value in place for memory limit. Here are two of the restrictions that a resource quota imposes on a namespace:

- Every Container that runs in the namespace must have its own memory limit.

- The total amount of memory used by all Containers in the namespace must not exceed a specified limit.

If a Container does not specify its own memory limit, it is given the default limit, and then it can be allowed to run in a namespace that is restricted by a quota.
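To see why the default matters, here is a rough Python model of the two quota restrictions listed above. It is a simplification under stated assumptions: quantities are plain byte counts, only memory limits are considered, and `quota_admits` is a hypothetical name; real ResourceQuota admission works on parsed resource quantities and tracks usage across the whole namespace.

```python
from typing import Optional


def quota_admits(existing_limits: list[int], new_limit: Optional[int],
                 quota_limit: int) -> bool:
    """Simplified memory-limits quota check for one new container.

    existing_limits: memory limits (bytes) of containers already counted in the namespace.
    new_limit: the new container's memory limit in bytes, or None if it has none.
    quota_limit: the namespace's total memory-limit quota in bytes.
    """
    if new_limit is None:
        # Restriction 1: every container must have its own memory limit.
        return False
    # Restriction 2: the total across all containers must stay within the quota.
    return sum(existing_limits) + new_limit <= quota_limit
```

With the LimitRange from this page in place, a container that omits its limit reaches a check like this with 512Mi (536870912 bytes) rather than None, so it can be admitted as long as the total fits.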

Clean up

Delete your namespace:

kubectl delete namespace default-mem-example

What's next

For cluster administrators

- Configure Minimum and Maximum Memory Constraints for a Namespace

- Configure Minimum and Maximum CPU Constraints for a Namespace

For app developers

4.2 - Configure Default CPU Requests and Limits for a Namespace

This page shows how to configure default CPU requests and limits for a namespace. A Kubernetes cluster can be divided into namespaces. If a Container is created in a namespace that has a default CPU limit, and the Container does not specify its own CPU limit, then the Container is assigned the default CPU limit. Kubernetes assigns a default CPU request under certain conditions that are explained later in this topic.

Before you begin

You need to have a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with your cluster. It is recommended to run this tutorial on a cluster with at least two nodes that are not acting as control plane hosts. If you do not already have a cluster, you can create one by using minikube or you can use one of these Kubernetes playgrounds:

To check the version, enter kubectl version.

Create a namespace

Create a namespace so that the resources you create in this exercise are isolated from the rest of your cluster.

kubectl create namespace default-cpu-example

Create a LimitRange and a Pod

Here's the configuration file for a LimitRange object. The configuration specifies a default CPU request and a default CPU limit.

apiVersion: v1
kind: LimitRange
metadata:
  name: cpu-limit-range
spec:
  limits:
  - default:
      cpu: 1
    defaultRequest:
      cpu: 0.5
    type: Container

Create the LimitRange in the default-cpu-example namespace:

kubectl apply -f https://k8s.io/examples/admin/resource/cpu-defaults.yaml --namespace=default-cpu-example

Now if a Container is created in the default-cpu-example namespace, and the Container does not specify its own values for CPU request and CPU limit, the Container is given a default CPU request of 0.5 and a default CPU limit of 1.

Here's the configuration file for a Pod that has one Container. The Container does not specify a CPU request and limit.

apiVersion: v1
kind: Pod
metadata:
  name: default-cpu-demo
spec:
  containers:
  - name: default-cpu-demo-ctr
    image: nginx

Create the Pod.

kubectl apply -f https://k8s.io/examples/admin/resource/cpu-defaults-pod.yaml --namespace=default-cpu-example

View the Pod's specification:

kubectl get pod default-cpu-demo --output=yaml --namespace=default-cpu-example

The output shows that the Pod's Container has a CPU request of 500 millicpus and a CPU limit of 1 cpu. These are the default values specified by the LimitRange.

containers:
- image: nginx
  imagePullPolicy: Always
  name: default-cpu-demo-ctr
  resources:
    limits:
      cpu: "1"
    requests:
      cpu: 500m
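The output reports 500m although the LimitRange said 0.5. Both spell the same amount: one CPU is 1000 millicpu, and the Pod output shows fractional CPU values in the m form while whole CPUs stay plain. A Python sketch of that conversion follows. It handles only plain decimals and the m suffix (no exponent forms), rejects anything finer than 1m, and its helper names are hypothetical.

```python
from decimal import Decimal


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "0.5", "1", or "250m" to millicores."""
    q = quantity.strip()
    if q.endswith("m"):
        millis = Decimal(q[:-1])
    else:
        millis = Decimal(q) * 1000
    if millis != millis.to_integral_value():
        # This sketch rejects precision finer than 1m.
        raise ValueError(f"precision finer than 1m: {quantity!r}")
    return int(millis)


def format_cpu(millicores: int) -> str:
    """Render millicores the way the Pod output above shows them."""
    if millicores % 1000 == 0:
        return str(millicores // 1000)
    return f"{millicores}m"
```

For example, `format_cpu(cpu_to_millicores("0.5"))` returns `"500m"` and `format_cpu(cpu_to_millicores("1"))` returns `"1"`, matching the requests and limits shown above.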

What if you specify a Container's limit, but not its request?

Here's the configuration file for a Pod that has one Container. The Container specifies a CPU limit, but not a request:

apiVersion: v1
kind: Pod
metadata:
  name: default-cpu-demo-2
spec:
  containers:
  - name: default-cpu-demo-2-ctr
    image: nginx
    resources:
      limits:
        cpu: "1"

Create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/cpu-defaults-pod-2.yaml --namespace=default-cpu-example

View the Pod specification:

kubectl get pod default-cpu-demo-2 --output=yaml --namespace=default-cpu-example

The output shows that the Container's CPU request is set to match its CPU limit. Notice that the Container was not assigned the default CPU request value of 0.5 cpu.

resources:
  limits:
    cpu: "1"
  requests:
    cpu: "1"

What if you specify a Container's request, but not its limit?

Here's the configuration file for a Pod that has one Container. The Container specifies a CPU request, but not a limit:

apiVersion: v1
kind: Pod
metadata:
  name: default-cpu-demo-3
spec:
  containers:
  - name: default-cpu-demo-3-ctr
    image: nginx
    resources:
      requests:
        cpu: "0.75"

Create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/cpu-defaults-pod-3.yaml --namespace=default-cpu-example

View the Pod specification:

kubectl get pod default-cpu-demo-3 --output=yaml --namespace=default-cpu-example

The output shows that the Container's CPU request is set to the value specified in the Container's configuration file. The Container's CPU limit is set to 1 cpu, which is the default CPU limit for the namespace.

resources:
  limits:
    cpu: "1"
  requests:
    cpu: 750m

Motivation for default CPU limits and requests

If your namespace has a resource quota, it is helpful to have a default value in place for CPU limit. Here are two of the restrictions that a resource quota imposes on a namespace:

- Every Container that runs in the namespace must have its own CPU limit.

- The total amount of CPU used by all Containers in the namespace must not exceed a specified limit.

If a Container does not specify its own CPU limit, it is given the default limit, and then it can be allowed to run in a namespace that is restricted by a quota.

Clean up

Delete your namespace:

kubectl delete namespace default-cpu-example

What's next

For cluster administrators

- Configure Default Memory Requests and Limits for a Namespace

- Configure Minimum and Maximum Memory Constraints for a Namespace

- Configure Minimum and Maximum CPU Constraints for a Namespace

For app developers

4.3 - Configure Minimum and Maximum Memory Constraints for a Namespace

This page shows how to set minimum and maximum values for memory used by Containers running in a namespace. You specify minimum and maximum memory values in a LimitRange object. If a Pod does not meet the constraints imposed by the LimitRange, it cannot be created in the namespace.

Before you begin

You need to have a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with your cluster. It is recommended to run this tutorial on a cluster with at least two nodes that are not acting as control plane hosts. If you do not already have a cluster, you can create one by using minikube or you can use one of these Kubernetes playgrounds:

To check the version, enter kubectl version.

Each node in your cluster must have at least 1 GiB of memory.

Create a namespace

Create a namespace so that the resources you create in this exercise are isolated from the rest of your cluster.

kubectl create namespace constraints-mem-example

Create a LimitRange and a Pod

Here's the configuration file for a LimitRange:

apiVersion: v1
kind: LimitRange
metadata:
  name: mem-min-max-demo-lr
spec:
  limits:
  - max:
      memory: 1Gi
    min:
      memory: 500Mi
    type: Container

Create the LimitRange:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-constraints.yaml --namespace=constraints-mem-example

View detailed information about the LimitRange:

kubectl get limitrange mem-min-max-demo-lr --namespace=constraints-mem-example --output=yaml

The output shows the minimum and maximum memory constraints as expected. But notice that even though you didn't specify default values in the configuration file for the LimitRange, they were created automatically.

limits:
- default:
    memory: 1Gi
  defaultRequest:
    memory: 1Gi
  max:
    memory: 1Gi
  min:
    memory: 500Mi
  type: Container
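The defaults in this output follow a simple pattern: the default limit equals max, and the default request equals the default limit. The following Python sketch reproduces that pattern for this example. It is a model written for illustration, based only on the output shown here, and not the API server's implementation; the function name default_limit_range_item is hypothetical.

```python
import copy


def default_limit_range_item(item: dict) -> dict:
    """Hypothetical helper: fill in default and defaultRequest for a Container-type item.

    Models the pattern in the output above: a missing default limit is taken
    from max, and a missing default request is taken from the default limit.
    """
    item = copy.deepcopy(item)  # leave the caller's dict untouched
    if item.get("type") != "Container":
        return item
    default = item.setdefault("default", {})
    default_request = item.setdefault("defaultRequest", {})
    for resource, value in item.get("max", {}).items():
        default.setdefault(resource, value)          # default limit <- max
    for resource, value in default.items():
        default_request.setdefault(resource, value)  # default request <- default limit
    return item


lr = {"type": "Container", "max": {"memory": "1Gi"}, "min": {"memory": "500Mi"}}
result = default_limit_range_item(lr)
print(result["default"], result["defaultRequest"])  # {'memory': '1Gi'} {'memory': '1Gi'}
```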

Now whenever a Container is created in the constraints-mem-example namespace, Kubernetes performs these steps:

- If the Container does not specify its own memory request and limit, assign the default memory request and limit to the Container.

- Verify that the Container has a memory request that is greater than or equal to 500 MiB.

- Verify that the Container has a memory limit that is less than or equal to 1 GiB.
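The three steps above can be sketched as a small Python function that works on byte counts. This is a simplified model, not the LimitRanger admission plugin: admit_memory is a hypothetical name, the error wording is invented for the sketch, and it only handles containers that set both values or neither (the partially specified case follows additional defaulting rules that this sketch does not model).

```python
from typing import Optional, Tuple

MI = 1024 ** 2
GI = 1024 ** 3

# Constraints from the mem-min-max-demo-lr LimitRange above, in bytes.
MIN_MEMORY = 500 * MI
MAX_MEMORY = 1 * GI
# Defaults that were filled in automatically (both 1Gi).
DEFAULT_LIMIT = 1 * GI
DEFAULT_REQUEST = 1 * GI


def admit_memory(request: Optional[int], limit: Optional[int]) -> Tuple[int, int]:
    """Return the effective (request, limit) in bytes, or raise ValueError if forbidden."""
    # Step 1: a container that specifies neither value gets both defaults.
    if request is None and limit is None:
        request, limit = DEFAULT_REQUEST, DEFAULT_LIMIT
    if request is None or limit is None:
        raise NotImplementedError("this sketch only handles both-set or both-unset")
    # Step 2: the request must be at least the minimum.
    if request < MIN_MEMORY:
        raise ValueError(f"request {request // MI}Mi is below the {MIN_MEMORY // MI}Mi minimum")
    # Step 3: the limit must be at most the maximum.
    if limit > MAX_MEMORY:
        raise ValueError(f"limit {limit // MI}Mi is above the {MAX_MEMORY // MI}Mi maximum")
    return request, limit


print(admit_memory(600 * MI, 800 * MI))  # within bounds, kept as specified
print(admit_memory(None, None))          # nothing specified, both defaults applied
for name, req, lim in [("too-large", 800 * MI, 1536 * MI), ("too-small", 100 * MI, 800 * MI)]:
    try:
        admit_memory(req, lim)
    except ValueError as err:
        print(name, "forbidden:", err)
```

The four calls mirror the Pods used in the rest of this page: one that fits, one with no values, one whose limit is too large, and one whose request is too small.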

Here's the configuration file for a Pod that has one Container. The Container manifest specifies a memory request of 600 MiB and a memory limit of 800 MiB. These satisfy the minimum and maximum memory constraints imposed by the LimitRange.

apiVersion: v1
kind: Pod
metadata:
  name: constraints-mem-demo
spec:
  containers:
  - name: constraints-mem-demo-ctr
    image: nginx
    resources:
      limits:
        memory: "800Mi"
      requests:
        memory: "600Mi"

Create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-constraints-pod.yaml --namespace=constraints-mem-example

Verify that the Pod's Container is running:

kubectl get pod constraints-mem-demo --namespace=constraints-mem-example

View detailed information about the Pod:

kubectl get pod constraints-mem-demo --output=yaml --namespace=constraints-mem-example

The output shows that the Container has a memory request of 600 MiB and a memory limit of 800 MiB. These satisfy the constraints imposed by the LimitRange.

resources:
  limits:
    memory: 800Mi
  requests:
    memory: 600Mi

Delete your Pod:

kubectl delete pod constraints-mem-demo --namespace=constraints-mem-example

Attempt to create a Pod that exceeds the maximum memory constraint

Here's the configuration file for a Pod that has one Container. The Container specifies a memory request of 800 MiB and a memory limit of 1.5 GiB.

apiVersion: v1
kind: Pod
metadata:
  name: constraints-mem-demo-2
spec:
  containers:
  - name: constraints-mem-demo-2-ctr
    image: nginx
    resources:
      limits:
        memory: "1.5Gi"
      requests:
        memory: "800Mi"

Attempt to create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-constraints-pod-2.yaml --namespace=constraints-mem-example

The output shows that the Pod does not get created, because the Container specifies a memory limit that is too large:

Error from server (Forbidden): error when creating "examples/admin/resource/memory-constraints-pod-2.yaml":
pods "constraints-mem-demo-2" is forbidden: maximum memory usage per Container is 1Gi, but limit is 1536Mi.
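The error reports the 1.5Gi limit as 1536Mi because Gi and Mi are binary (power-of-two) suffixes: 1.5 × 1024 MiB = 1536 MiB. The following Python sketch converts such quantities to bytes so they can be compared. It is an illustrative helper with a hypothetical name, not the Kubernetes quantity parser: it only understands the binary suffixes listed, ignores decimal suffixes such as M or G, and truncates any fractional byte.

```python
from decimal import Decimal

# Binary (power-of-two) suffixes only; decimal suffixes such as M or G are not handled here.
BINARY_SUFFIXES = {"Ki": 2 ** 10, "Mi": 2 ** 20, "Gi": 2 ** 30, "Ti": 2 ** 40}


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "1.5Gi" or "800Mi" into bytes (sketch only)."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(Decimal(quantity))  # no suffix: the value is already in bytes


limit = memory_bytes("1.5Gi")
print(limit)                        # 1610612736
print(limit // 2 ** 20, "Mi")       # 1536 Mi
print(limit > memory_bytes("1Gi"))  # True, so the 1Gi maximum is exceeded
```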

Attempt to create a Pod that does not meet the minimum memory request

Here's the configuration file for a Pod that has one Container. The Container specifies a memory request of 100 MiB and a memory limit of 800 MiB.

apiVersion: v1
kind: Pod
metadata:
  name: constraints-mem-demo-3
spec:
  containers:
  - name: constraints-mem-demo-3-ctr
    image: nginx
    resources:
      limits:
        memory: "800Mi"
      requests:
        memory: "100Mi"

Attempt to create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-constraints-pod-3.yaml --namespace=constraints-mem-example

The output shows that the Pod does not get created, because the Container specifies a memory request that is too small:

Error from server (Forbidden): error when creating "examples/admin/resource/memory-constraints-pod-3.yaml":
pods "constraints-mem-demo-3" is forbidden: minimum memory usage per Container is 500Mi, but request is 100Mi.

Create a Pod that does not specify any memory request or limit

Here's the configuration file for a Pod that has one Container. The Container does not specify a memory request, and it does not specify a memory limit.

apiVersion: v1
kind: Pod
metadata:
  name: constraints-mem-demo-4
spec:
  containers:
  - name: constraints-mem-demo-4-ctr
    image: nginx

Create the Pod:

kubectl apply -f https://k8s.io/examples/admin/resource/memory-constraints-pod-4.yaml --namespace=constraints-mem-example

View detailed information about the Pod:

kubectl get pod constraints-mem-demo-4 --namespace=constraints-mem-example --output=yaml

The output shows that the Pod's Container has a memory request of 1 GiB and a memory limit of 1 GiB. How did the Container get those values?

resources:
  limits:
    memory: 1Gi
  requests:
    memory: 1Gi

Because your Container did not specify its own memory request and limit, it was given the default memory request and limit from the LimitRange.

At this point, your Container might or might not be running. Recall that a prerequisite for this task is that your Nodes have at least 1 GiB of memory. If each of your Nodes has only 1 GiB of memory, then there is not enough allocatable memory on any Node to accommodate a memory request of 1 GiB, because part of each Node's memory is reserved for the operating system and Kubernetes system components. If you happen to be using Nodes with 2 GiB of memory, then you probably have enough space to accommodate the 1 GiB request.
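The arithmetic behind "might or might not be running" can be sketched as a scheduler-style fit check: a Pod fits only if its memory request, plus what is already requested on the Node, is no more than the Node's allocatable memory (capacity minus reserved memory). This is a simplified illustration, not the scheduler's code; the function name is hypothetical and the 300Mi reservation is a made-up figure, since the real value depends on your Node configuration.

```python
MI = 1024 ** 2
GI = 1024 ** 3


def fits_on_node(request: int, capacity: int, reserved: int, already_requested: int = 0) -> bool:
    """Return True if a memory request (bytes) fits in the Node's remaining allocatable memory."""
    allocatable = capacity - reserved  # memory left for Pods after system reservations
    return already_requested + request <= allocatable


# Hypothetical reservation of 300Mi for the OS, kubelet, and eviction threshold.
print(fits_on_node(1 * GI, capacity=1 * GI, reserved=300 * MI))  # False
print(fits_on_node(1 * GI, capacity=2 * GI, reserved=300 * MI))  # True
```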

Delete your Pod:

kubectl delete pod constraints-mem-demo-4 --namespace=constraints-mem-example

Enforcement of minimum and maximum memory constraints

The maximum and minimum memory constraints imposed on a namespace by a LimitRange are enforced only when a Pod is created or updated. If you change the LimitRange, it does not affect Pods that were created previously.

Motivation for minimum and maximum memory constraints

As a cluster administrator, you might want to impose restrictions on the amount of memory that Pods can use. For example:

- Each Node in a cluster has 2 GB of memory. You do not want to accept any Pod that requests more than 2 GB of memory, because no Node in the cluster can support the request.

- A cluster is shared by your production and development departments. You want to allow production workloads to consume up to 8 GB of memory, but you want development workloads to be limited to 512 MB. You create separate namespaces for production and development, and you apply memory constraints to each namespace.

Clean up

Delete your namespace:

kubectl delete namespace constraints-mem-example

What's next

For cluster administrators

- Configure Default Memory Requests and Limits for a Namespace

- Configure Minimum and Maximum CPU Constraints for a Namespace

For app developers

4.4 - Configure Minimum and Maximum CPU Constraints for a Namespace

This page shows how to set minimum and maximum values for the CPU resources used by Containers and Pods in a namespace. You specify minimum and maximum CPU values in a LimitRange object. If a Pod does not meet the constraints imposed by the LimitRange, it cannot be created in the namespace.

Before you begin

You need to have a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with your cluster. It is recommended to run this tutorial on a cluster with at least two nodes that are not acting as control plane hosts. If you do not already have a cluster, you can create one by using minikube or you can use one of these Kubernetes playgrounds:

To check the version, enter kubectl version.

Your cluster must have at least 1 CPU available for use to run the task examples.

Create a namespace

Create a namespace so that the resources you create in this exercise are isolated from the rest of your cluster.

kubectl create namespace constraints-cpu-example

Create a LimitRange and a Pod

Here's the configuration file for a LimitRange:

apiVersion: v1
kind: LimitRange
metadata:
  name: cpu-min-max-demo-lr
spec:
  limits:
  - max:
      cpu: "800m"
    min:
      cpu: "200m"
    type: Container

Create the LimitRange:

kubectl apply -f https://k8s.io/examples/admin/resource/cpu-constraints.yaml --namespace=constraints-cpu-example

View detailed information about the LimitRange:

kubectl get limitrange cpu-min-max-demo-lr --output=yaml --namespace=constraints-cpu-example

The output shows the minimum and maximum CPU constraints as expected. But notice that even though you didn't specify default values in the configuration file for the LimitRange, they were created automatically.

limits:
- default:
    cpu: 800m
  defaultRequest:
    cpu: 800m
  max:
    cpu: 800m
  min:
    cpu: 200m
  type: Container

Now whenever a Container is created in the constraints-cpu-example namespace, Kubernetes performs these steps:

- If the Container does not specify its own CPU request and limit, assign the default CPU request and limit to the Container.

- Verify that the Container specifies a CPU request that is greater than or equal to 200 millicpu.

- Verify that the Container specifies a CPU limit that is less than or equal to 800 millicpu.
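The two verification steps compare CPU quantities in millicpu, where "1" means 1000m and "0.5" means 500m. The following Python sketch performs that conversion and lists which checks a container would fail. It is an illustrative model with hypothetical function names and invented message wording, not the admission plugin itself; it only handles the "m" suffix and plain decimal values.

```python
from decimal import Decimal
from typing import List


def to_millicpu(quantity: str) -> int:
    """Convert a CPU quantity such as "800m", "1", or "0.5" into millicpu (sketch only)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


# Constraints from the cpu-min-max-demo-lr LimitRange above.
MIN_CPU_M = to_millicpu("200m")
MAX_CPU_M = to_millicpu("800m")


def cpu_violations(request: str, limit: str) -> List[str]:
    """List the LimitRange checks that a container's CPU request and limit fail."""
    problems = []
    if to_millicpu(request) < MIN_CPU_M:
        problems.append(f"request {request} is below the 200m minimum")
    if to_millicpu(limit) > MAX_CPU_M:
        problems.append(f"limit {limit} is above the 800m maximum")
    return problems


print(cpu_violations("500m", "800m"))  # []
print(cpu_violations("100m", "1.5"))   # both checks fail
```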

Note: When creating a LimitRange object, you can specify limits on huge-pages or GPUs as well. However, when both default and defaultRequest are specified on these resources, the two values must be the same.
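The rule in this note can be sketched as a small validation function. This is an illustration, not the API server's validation code: the predicate that treats names starting with hugepages- and domain-prefixed names such as nvidia.com/gpu as the affected resources is an assumption made for the sketch, the function names are hypothetical, and values are compared as literal strings rather than as parsed quantities.

```python
from typing import Dict, List


def is_restricted(name: str) -> bool:
    """Assumed predicate: huge pages and domain-prefixed extended resources such as GPUs."""
    return name.startswith("hugepages-") or ("/" in name and not name.startswith("kubernetes.io/"))


def default_mismatches(default: Dict[str, str], default_request: Dict[str, str]) -> List[str]:
    """Report restricted resources whose default and defaultRequest differ."""
    errors = []
    for name in sorted(default):
        if not is_restricted(name) or name not in default_request:
            continue  # the rule only applies when both values are given for these resources
        if default[name] != default_request[name]:  # literal string comparison
            errors.append(f"{name}: default {default[name]} != defaultRequest {default_request[name]}")
    return errors


print(default_mismatches({"nvidia.com/gpu": "1", "cpu": "800m"},
                         {"nvidia.com/gpu": "1", "cpu": "500m"}))  # []
print(default_mismatches({"hugepages-2Mi": "100Mi"}, {"hugepages-2Mi": "50Mi"}))
```

CPU is allowed to have a default request lower than its default limit, so only the GPU entry is checked in the first call; the huge-page entry in the second call is reported because its two values differ.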

Here's the configuration file for a Pod that has one Container. The Container manifest specifies a CPU request of 500 millicpu and a CPU limit of 800 millicpu. These satisfy the minimum and maximum CPU constraints imposed by the LimitRange.

apiVersion: v1
kind: Pod
metadata: